This post is part of a series on DevSecOps and CI/CD security. Check out the overview for context and links to the rest of the series.

This post lays the CI/CD foundation for other security-focused posts, covering:

The main goal of CI/CD is delivering high-quality, well-tested software quickly and consistently. If you’re new to DevSecOps and CI/CD or want a refresher, start here:

An Example Pipeline

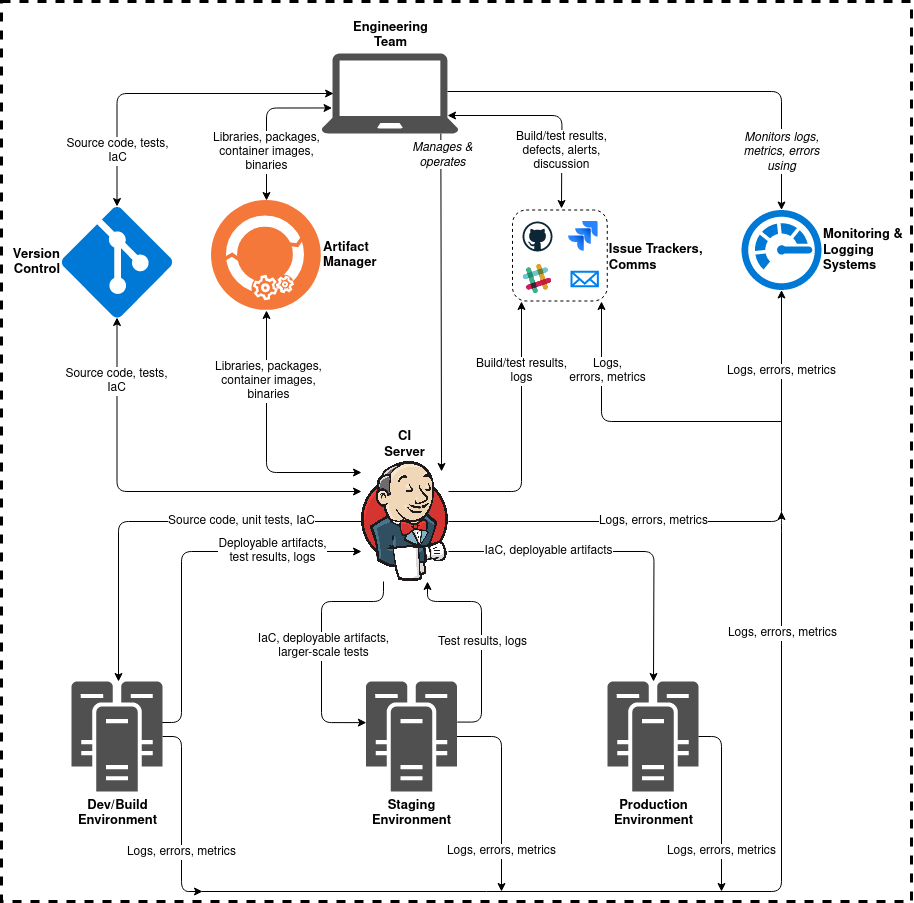

Here’s a simplified view of a typical CI/CD pipeline flow:

Other posts in this series will refer back to this example pipeline. Real CI/CD pipelines vary widely in design, tool selection and implementation.

Pipeline Components & Tools

Here are some key CI/CD tool categories, their purpose, and common tools in the category:

| Category | Purpose | Examples |
|---|---|---|
| CI server | Coordinate and run pipeline tasks | GitHub Actions, GitLab CI/CD, Jenkins, TeamCity, CircleCI, … |
| Version control | Store/manage source code, IaC, and related files | Git, Subversion, Perforce, … |
| Artifact manager | Manage compiled code, libraries/packages, container images, etc. | Archiva, Artifactory, Nexus, … |
| IaC tools | Automate environment provisioning and management | Terraform, AWS CloudFormation, Ansible, Chef, Puppet, Packer, Docker, … |
| Issue tracker | Track/manage software defects or issues | Jira, GitHub/GitLab issues, Redmine, Bugzilla, Mantis, … |
| Monitoring/logging | Monitor pipelines, environments, and applications | ELK stack, Graylog, Splunk, Loggly, Sumo Logic, … |

CI/CD Terms

Some common CI/CD terms:

- A CI/CD pipeline is the set of components that perform continuous integration/delivery/deployment. The pipeline revolves around the CI server.

- Infrastructure-as-Code (IaC) is executable code, configuration, and tools for automating infrastructure management. It differs from traditional interactive or script-based approaches to infrastructure management, emphasizing idempotence and repeatability.

- Continuous Integration (CI) involves frequent commits and merges to a version-controlled repository. A CI server builds, tests, and (sometimes) deploys the software.

- Continuous Delivery (CD) extends CI with human-controlled, automation-assisted deployment to production. Test suites are more complex, emphasizing integration/end-to-end testing and infrastructure-as-code.

- Continuous Deployment (CD or CD²) extends continuous delivery with self-executing automation. When new code is merged or tagged in a specific branch, a fully automated process builds, tests, and deploys to production without manual intervention. This depends on comprehensive, reliable testing and rollback capabilities.
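The difference between continuous delivery and continuous deployment comes down to who triggers the production release. Here is a minimal Python sketch of that decision. The `Build` type, the `mode` setting, and the `approved_by` parameter are hypothetical names chosen for illustration and do not come from any particular CI server.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Build:
    version: str
    tests_passed: bool


def should_deploy(build: Build, mode: str, approved_by: Optional[str] = None) -> bool:
    """Decide whether a build may be released to production.

    mode is a hypothetical setting:
      "delivery"   -> a human must approve the release
      "deployment" -> the release happens automatically once tests pass
    """
    if not build.tests_passed:
        return False  # neither model ships a failing build
    if mode == "delivery":
        return approved_by is not None
    if mode == "deployment":
        return True
    raise ValueError(f"unknown mode: {mode}")
```

In this sketch, a passing build in `"delivery"` mode waits until someone supplies `approved_by`, while the same build in `"deployment"` mode is released immediately.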

CI/CD Characteristics

Here are some prominent features of typical CI/CD pipelines, and how the components fit together.

Heavy use of version control: source code, tests, IaC, and other files used to create software are version-controlled (usually with Git). Closely related: GitOps.

Heavy use of infrastructure-as-code (IaC): IaC tools abstract over infrastructure components that were traditionally managed manually or via scripts, such as:

- Disk images

- Configuration files and registry keys

- Services, daemons, and processes

- Inter-process communication mechanisms

- Group policy objects

- DNS entries

- Networking configuration

- Routing and firewall rules

- Cloud services

These abstractions are used in code, which is executed to provision and manage infrastructure. Cloud services and other API-controllable services pair well with IaC.

IaC tools emphasize idempotence: executing the same IaC multiple times yields the same results each time. This is incredibly useful for reasoning about and managing infrastructure, effectively turning architecture into executable code. This code can also be version-controlled, linted, tested, and analyzed by other tools. See also: cattle, not pets (commoditized, repeatable infrastructure).
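To make idempotence concrete, here is a small Python sketch. It models infrastructure as a plain dictionary, which is an assumption made for illustration. Real IaC tools talk to APIs or hosts instead. The function name `ensure_firewall_rule` is hypothetical.

```python
from typing import Dict


def ensure_firewall_rule(state: Dict, port: int, action: str = "allow") -> bool:
    """Make sure a rule for `port` exists with `action`.

    Returns True if the state had to change, False if it already matched.
    """
    rules = state.setdefault("firewall_rules", {})
    if rules.get(port) == action:
        return False  # desired state already in place, nothing to do
    rules[port] = action
    return True


state: Dict = {}
first = ensure_firewall_rule(state, 443)   # creates the rule
second = ensure_firewall_rule(state, 443)  # no-op: same end state as before
```

The function describes a desired end state ("port 443 is allowed") rather than an action ("add a rule"). Running it a second time leaves `state` unchanged, whereas a script that blindly appended a rule on every run would accumulate duplicates.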

CI server runs the show: the CI server acts as the pipeline “brain”. It:

- Builds source code

- Runs tests and collects the results (and feeds them to other systems)

- Deploys the software to a running environment
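The build, test, and deploy sequence above can be sketched as a tiny stage runner in Python. This is a simplified illustration, not how any specific CI server is implemented. The `run_pipeline` function and the stage callables are hypothetical.

```python
from typing import Callable, List, Tuple


def run_pipeline(stages: List[Tuple[str, Callable[[], bool]]]) -> List[Tuple[str, str]]:
    """Run stages in order and stop at the first failure."""
    results = []
    for name, step in stages:
        ok = step()
        results.append((name, "passed" if ok else "failed"))
        if not ok:
            break  # later stages (such as deploy) never run after a failure
    return results


# Hypothetical stages; real ones would invoke compilers, test runners, and deploy tools.
example = run_pipeline([
    ("build", lambda: True),
    ("test", lambda: False),
    ("deploy", lambda: True),
])
print(example)  # [('build', 'passed'), ('test', 'failed')]
```

The collected `results` list stands in for the test results and status data that a CI server would feed to other systems.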

Issue tracker integration: tests and tools identify software defects (including security issues), filter false positives, and create issues in issue trackers.
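As a rough sketch of that flow, the Python below filters findings and decides which ones should become new issues. The `Finding` type, the suppression list, and the issue key format are assumptions made for illustration. A real integration would call the issue tracker's API instead of returning dictionaries.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set


@dataclass(frozen=True)
class Finding:
    rule_id: str
    path: str
    severity: str


def triage(findings: Iterable[Finding],
           suppressed_rules: Set[str],
           open_issue_keys: Set[str]) -> List[Dict[str, str]]:
    """Drop known false positives and duplicates; return issues to create."""
    seen = set(open_issue_keys)
    new_issues = []
    for f in findings:
        key = f"{f.rule_id}:{f.path}"
        if f.rule_id in suppressed_rules or key in seen:
            continue  # false positive, or an issue already exists
        seen.add(key)
        new_issues.append({"key": key,
                           "title": f"[{f.severity}] {f.rule_id} in {f.path}"})
    return new_issues
```

Keying each issue on rule and file path is one simple way to avoid filing the same defect on every pipeline run.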

Artifact manager wears many hats: it stores and serves packages/libraries used during builds, and stores deployable artifacts generated by builds. For example:

- Container images

- Operating system packages (yum/apt/apk/etc.)

- Libraries from RubyGems/PyPI/NuGet/etc.

- Compiled binaries or deployable packages

The artifact manager can apply intelligence to what it manages: scanning container images for vulnerabilities or hard-coded secrets, checking libraries/packages for known vulnerabilities, enforcing licensing restrictions, etc. Some pipelines forgo artifact managers and move build artifacts directly from the CI server.
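Here is a minimal Python sketch of the kind of policy check an artifact manager might apply before serving a package. The function name, the package names, and the data sets are all hypothetical. Real products use vulnerability databases and richer license metadata.

```python
from typing import List, Set, Tuple


def check_package(name: str,
                  version: str,
                  license_id: str,
                  known_vulnerable: Set[Tuple[str, str]],
                  allowed_licenses: Set[str]) -> List[str]:
    """Return a list of policy problems; an empty list means the package passes."""
    problems = []
    if (name, version) in known_vulnerable:
        problems.append("known vulnerability")
    if license_id not in allowed_licenses:
        problems.append("license not allowed")
    return problems


# Hypothetical policy data for illustration only.
known_vulnerable = {("examplelib", "1.2.0")}
allowed_licenses = {"MIT", "Apache-2.0"}

print(check_package("examplelib", "1.2.0", "MIT", known_vulnerable, allowed_licenses))
# ['known vulnerability']
```

A pipeline could fail the build, or simply raise a warning, whenever the returned list is not empty.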

Comprehensive monitoring/logging: monitoring and logging occur throughout the pipeline, covering both the pipeline itself and the deployment environments it manages. They apply at multiple layers, such as:

- Application

- Web server

- Runtime environment/middleware

- Operating system

- Network

- Cloud service provider

Tools like Splunk, the ELK stack, and Prometheus collect and aggregate logs, events, and metrics. These tools, or a separate visualization tool like Grafana, then analyze the data and present it in useful forms, such as a searchable index, dashboards/graphs/charts, or notifications (webhooks, emails, Slack messages, …). The output of monitoring systems supports daily operations and continuous improvement.
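The collect, aggregate, and notify cycle can be sketched in a few lines of Python. The event format, the `summarize` function, and the threshold are assumptions made for illustration and are unrelated to the data models of the tools named above.

```python
from collections import Counter
from typing import Dict, List, Tuple


def summarize(events: List[Dict[str, str]],
              error_threshold: int) -> Tuple[Counter, List[str]]:
    """Count events per (layer, level) and list layers that need an alert."""
    counts = Counter((e["layer"], e["level"]) for e in events)
    alerts = sorted(
        layer for (layer, level), n in counts.items()
        if level == "error" and n >= error_threshold
    )
    return counts, alerts


# Hypothetical events gathered from several layers.
events = [
    {"layer": "application", "level": "error"},
    {"layer": "application", "level": "error"},
    {"layer": "network", "level": "error"},
    {"layer": "web server", "level": "info"},
]
counts, alerts = summarize(events, error_threshold=2)
print(alerts)  # ['application']
```

The `counts` value corresponds to what a dashboard would chart, and `alerts` to the layers that would trigger a notification.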

Thanks for reading. If you enjoyed this, check out the next post in the series on DevSecOps strategy.